Over the roughly 10 years of my career that I dedicated to testing, I developed a set of testing best practices. They assume an environment with automated baseline testing (recording a system's output and comparing later runs against a reviewed copy of that output), but many also apply to manual testing and to testing with traditional test frameworks.

Here are some of the best practices I've picked up over time:

Breadth over Depth

Generally, focus on broad coverage before edge cases. Possible counterpoint: prioritize coverage of known-buggy, historically problematic areas.
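As a rough illustration of breadth first, here is a minimal Python sketch that runs one cheap happy-path check per feature before any deep edge-case work. The product functions (`login`, `create_account`, `search`) and the `run_smoke_checks` helper are hypothetical stand-ins, not a real API.

```python
# Hypothetical stand-ins for real product features.
def login(user, password):
    return password == "correct-horse"

def create_account(email):
    return "@" in email

def search(query):
    return [item for item in ["apple", "banana", "cherry"] if query in item]

# Breadth first: one shallow happy-path check per feature.
SMOKE_CHECKS = {
    "login": lambda: login("alice", "correct-horse") is True,
    "create_account": lambda: create_account("alice@example.com") is True,
    "search": lambda: search("an") == ["banana"],
}

def run_smoke_checks(checks):
    """Run every check and return {feature_name: passed}."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            # A crash counts as a failure, but the sweep continues so
            # every feature still gets a result.
            results[name] = False
    return results

for name, passed in run_smoke_checks(SMOKE_CHECKS).items():
    print(f"{'PASS' if passed else 'FAIL'}  {name}")
```

Deeper edge-case suites can then be added feature by feature, starting with the areas that have historically been buggy.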

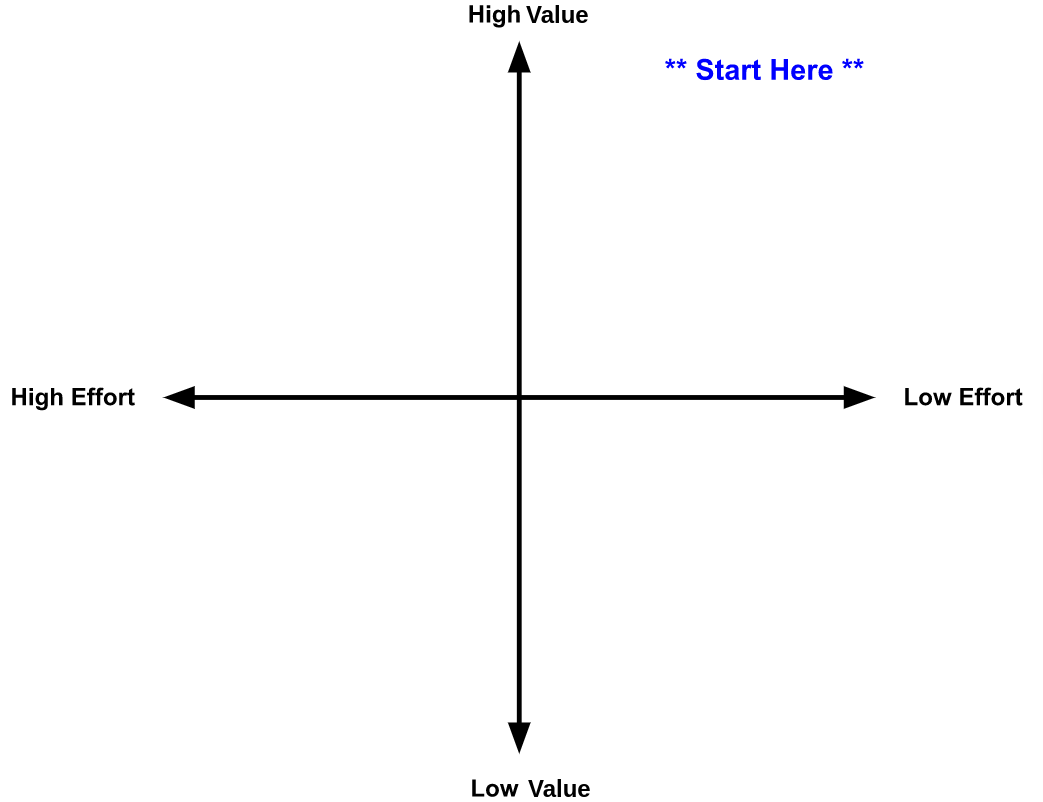

Prioritize by ROI

Prefer covering easy-to-test features of high value over difficult-to-test features of low consequence. This should be obvious, but the point is to think about tests explicitly in terms of value versus effort.

How do you know what's high value? Tests of essential functionality (e.g., logging in, creating new accounts, essential happy-path product flows) and tests of historically problematic or fickle product features.
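One way to make the value-versus-effort trade-off explicit is to score candidate tests and sort by the ratio. The sketch below assumes a made-up 1 to 5 scale for value and effort and an arbitrary bonus for historically problematic areas; the `Candidate` class and the scoring rule are illustrative, not a standard formula.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    value: int    # assumed scale: 1 (low consequence) .. 5 (essential)
    effort: int   # assumed scale: 1 (easy to test) .. 5 (very hard to test)
    historically_buggy: bool = False

def roi(c):
    # Assumption: areas with a history of regressions get a fixed value bonus.
    value = c.value + (2 if c.historically_buggy else 0)
    return value / c.effort

def prioritize(candidates):
    """Highest value-per-effort first."""
    return sorted(candidates, key=roi, reverse=True)

backlog = [
    Candidate("pixel-perfect export of legacy reports", value=1, effort=5),
    Candidate("log in", value=5, effort=1),
    Candidate("create new account", value=5, effort=2),
    Candidate("flaky sync between devices", value=3, effort=3, historically_buggy=True),
]

for c in prioritize(backlog):
    print(f"{roi(c):.2f}  {c.name}")
```

The exact numbers matter less than the habit: writing down value and effort forces the comparison the section describes.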

Break it Down

Prefer many small, granular tests over a few large, all-encompassing ones. Granular tests are easier to debug and, when they fail, potentially much more informative than a monolithic test. When a single granular test fails, you'll know right where to focus your energy.

Counterpoint: sometimes setup is so expensive that keeping tests granular is cost-prohibitive. In that case, consider moving the environment setup into the framework, outside the individual test cases, so it runs once and is shared by the granular tests.
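A minimal sketch with Python's standard `unittest` module: `setUpClass` builds the (here, pretend) expensive environment once for the whole class, and each test method checks one thing. `FakeEnvironment` is a hypothetical stand-in for something like a seeded database or a deployed service.

```python
import unittest

class FakeEnvironment:
    """Hypothetical stand-in for an expensive environment."""
    setups = 0  # counts how many times the expensive setup ran

    def __init__(self):
        FakeEnvironment.setups += 1
        self.users = {"alice": "admin", "bob": "viewer"}

    def role_of(self, user):
        return self.users.get(user)


class RoleTests(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        # Expensive setup runs once per class, outside the individual tests.
        cls.env = FakeEnvironment()

    # Each test checks one thing, so a failure points straight at the cause.
    def test_alice_is_admin(self):
        self.assertEqual(self.env.role_of("alice"), "admin")

    def test_bob_is_viewer(self):
        self.assertEqual(self.env.role_of("bob"), "viewer")

    def test_unknown_user_has_no_role(self):
        self.assertIsNone(self.env.role_of("mallory"))
```

Run it with `python -m unittest <module_name>`. A failure in `test_bob_is_viewer` points directly at that user's role data, while the setup cost is paid only once.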

Make Readable Test Output

Investigating failures is easier when intent and context are embedded in the test output: knowing what is being tested, and why, can be a big help. Include context, links to bugs/tickets, and any other information that will help you or a future test debugger understand your tests.

For baseline testing: if it's good enough for a comment, it's probably good enough to be included in the baseline context.
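To illustrate carrying intent into baseline output, here is a small hypothetical formatter that puts a title, the reason for the test, and an optional ticket reference at the top of each baseline entry, the same things you might otherwise write in a comment. The function name, the `#` prefix convention, the scenario, and the ticket ID are all assumptions for illustration.

```python
def baseline_section(title, why, observations, ticket=None):
    """Format one baseline entry with its intent up front.

    Lines starting with '#' carry context for a human reviewer;
    the remaining lines are the observed behavior being compared.
    """
    lines = [f"# {title}", f"# Why: {why}"]
    if ticket:
        lines.append(f"# Related: {ticket}")
    lines.extend(f"{key}: {value}" for key, value in observations)
    return "\n".join(lines) + "\n"


# Illustrative scenario and ticket ID (both hypothetical).
print(baseline_section(
    title="Password reset email for locked account",
    why="Locked accounts previously received no reset email",
    observations=[("status", 200), ("email_sent", True)],
    ticket="BUG-1234",
))
```

Because the context lives in the baseline itself, it shows up right next to any diff a reviewer sees when the test fails.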

Describe First, Then Judge

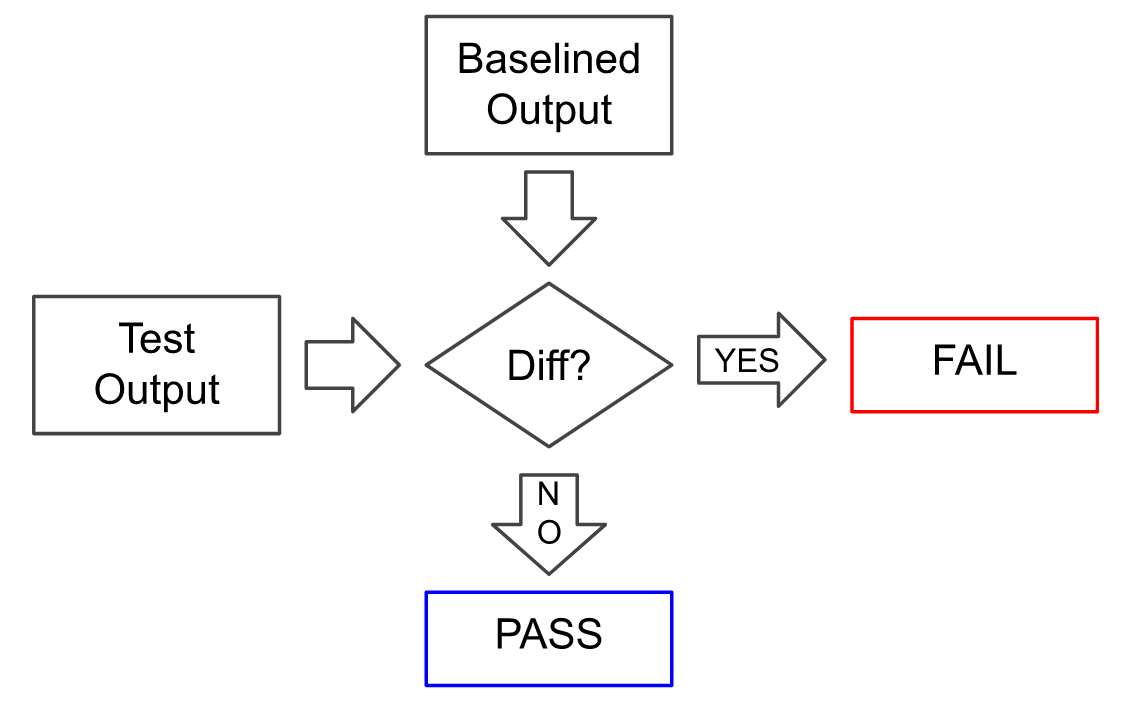

When creating baseline tests, first capture the behavior of the system under test. Once you've captured it, carefully inspect the output before adding it to the automated tests.

This could also be described as: document how the system behaves, then evaluate it for correctness.
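The capture-then-review workflow can be sketched as a small helper that always records what the system did, but never treats a first run as correct until a person has approved it. The `.received.txt`/`.approved.txt` naming is borrowed from a convention some approval-testing tools use; `check_against_baseline` itself is a hypothetical sketch, not a specific library's API.

```python
from pathlib import Path

def check_against_baseline(name, observed, baseline_dir):
    """Compare observed output with an approved baseline file.

    Returns "match", "mismatch", or "needs-review". Observed output is
    always written to <name>.received.txt so it can be inspected; it only
    becomes the baseline when a person copies it to <name>.approved.txt.
    """
    baseline_dir = Path(baseline_dir)
    baseline_dir.mkdir(parents=True, exist_ok=True)
    received = baseline_dir / f"{name}.received.txt"
    approved = baseline_dir / f"{name}.approved.txt"

    # Describe: always record what the system actually did.
    received.write_text(observed)

    # Judge: never auto-approve a first run; a human must inspect it.
    if not approved.exists():
        return "needs-review"
    return "match" if approved.read_text() == observed else "mismatch"
```

Approving a baseline stays a deliberate human step (for example, copying the received file after review), which keeps the "judge" half of the workflow from being skipped.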

Hastily Create Tests; Carefully Inspect Output

It's easy to miss subtly incorrect behavior in baseline tests, so inspect the output carefully. Help yourself by crafting the test output to be easy to inspect (e.g., by adding context).

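This relates to getting breadth first, but also to the shift in mindset that baseline testing requires compared with a traditional test framework approach: rather than writing each expected result up front, you capture behavior quickly and spend your care on reviewing it.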
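When reviewing a changed baseline, showing only what changed, with a few surrounding lines of context, makes subtle differences harder to miss than comparing two full outputs by eye. A minimal sketch using the standard library's `difflib.unified_diff`; the `review_diff` name and the sample baseline text are hypothetical.

```python
import difflib

def review_diff(approved, received, name="baseline"):
    """Return a unified diff so reviewers see only what changed."""
    diff = difflib.unified_diff(
        approved.splitlines(keepends=True),
        received.splitlines(keepends=True),
        fromfile=f"{name}.approved",
        tofile=f"{name}.received",
    )
    return "".join(diff)


# Hypothetical baseline contents; the context line travels with the diff.
approved = "# Why: guard against BUG-1234\nstatus: 200\nitems: 3\n"
received = "# Why: guard against BUG-1234\nstatus: 200\nitems: 2\n"
print(review_diff(approved, received, name="cart"))
```

An empty result means nothing changed, so reviewers only spend attention where the behavior actually moved.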

Don't Log the World

Log a behavior verbosely only in the tests dedicated to it. Too much unrelated baseline output can bury the signal you want in a test case. For example, if most test cases need to log in before the test scenario begins, verbosely logging sign-in behavior in every one of them is redundant. Log sign-in behavior verbosely only in the granular tests dedicated to sign-in scenarios.
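A minimal sketch of the idea, assuming a hypothetical shared `sign_in` helper that writes to a baseline log: only the baseline dedicated to sign-in asks for verbose output, while other scenarios record a single line of context. All names here are illustrative.

```python
def sign_in(log, user, verbose=False):
    """Hypothetical shared sign-in step used by many test scenarios."""
    steps = [
        f"open sign-in page for {user}",
        "submit credentials",
        "redirected to dashboard",
    ]
    if verbose:
        log.extend(steps)  # full detail: this scenario is *about* sign-in
    else:
        log.append(f"(signed in as {user})")  # one line of context only
    return {"user": user}


def baseline_sign_in_flow():
    """Dedicated sign-in scenario: verbose."""
    log = []
    sign_in(log, "alice", verbose=True)
    return log


def baseline_rename_project():
    """Sign-in is only a prerequisite here, so it stays quiet."""
    log = []
    session = sign_in(log, "alice")
    log.append(f"{session['user']} renamed project to 'Q3 plan'")
    return log
```

If sign-in breaks, the dedicated baseline shows exactly which step changed, and the other baselines are not flooded with the same sign-in noise.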

Avoid the Science Fair Project

Automation efforts should start showing results quickly: catching bugs and behavior regressions early, including bugs that would not have been caught without the automation.

This contrasts with the "science fair project" that I've observed several times when joining an existing, under-performing test team. You might have a "science fair project" if:

- When the automation fails, the supporting teams presume a problem with the test cases rather than a regression in the product (low signal-to-noise ratio)

- There's no evidence that automation ever catches bugs

- Coverage is shallow and difficult to extend

- Running the tests or investigating their failures depends on a single person on the test team (a single point of failure)

- Supporting teams (developers, PMs, other testers, etc.) can see that the tests failed, but have no way to tell what failed or why

A very smart development manager I once worked with used to say, "If you want more tests, make it easy to write tests." Tests should be as uncomplicated as possible. They should be highly visible. They should be informative. Demonstrate your cleverness through how simple you can make the tests, not by making them complicated.